Binary Russian Roulette: Identifying Leaked Ed25519 Private Keys in Bare Metal Code

Abstract

This page is a walkthrough for St. John's, the second in a recent series of reverse engineering challenges from the embedded systems division at NCC Group.

Executive Summary

[These] levels will let you discover and exploit some bootloader-based vulnerabilities we have discovered in the past. — NCC Group

This write-up, and the two following ones in the series, involve exploiting flaws in glorified secure boot implementations.

Overview

First Working Exploit: 0326 UTC, February 14th, 2023

Blockchain Timestamp: 0526 UTC, February 14th, 2023

Pastebin Timestamp: pastebin.com/RDdgZe4t

Cryptographic Proof of Existence: solution.txt solution.txt.ots

Solve Count At Time Of Writing: 53

Solves Per Month: 4.14

Reading Time: 14 minutes

Rendering Note:

There is a known issue with Android lacking a true monotype system font, which breaks many of the extended ASCII character set diagrams below. Please view this page on a Chrome or Firefox-based desktop browser to avoid rendering issues. Ideally on a Linux host.

Background

The following is a walkthrough for the second in the new series of Microcorruption challenges. The original CTF-turned-wargame was developed a decade ago by Matasano and centered around a deliberately vulnerable smart lock. The goal for each challenge was simple: write a software exploit to trigger an unlock.

NCC Group later acquired Matasano. They continued maintaining the wargame and added half a dozen new challenges on October 28th, 2022, of which this is one.

System Architecture

The emulated device runs on the MSP430 instruction set architecture. It uses a 16-bit little-endian processor and has 64 kilobytes of RAM. The official manual includes the details, but relevant functionality is summarized below.

Interface

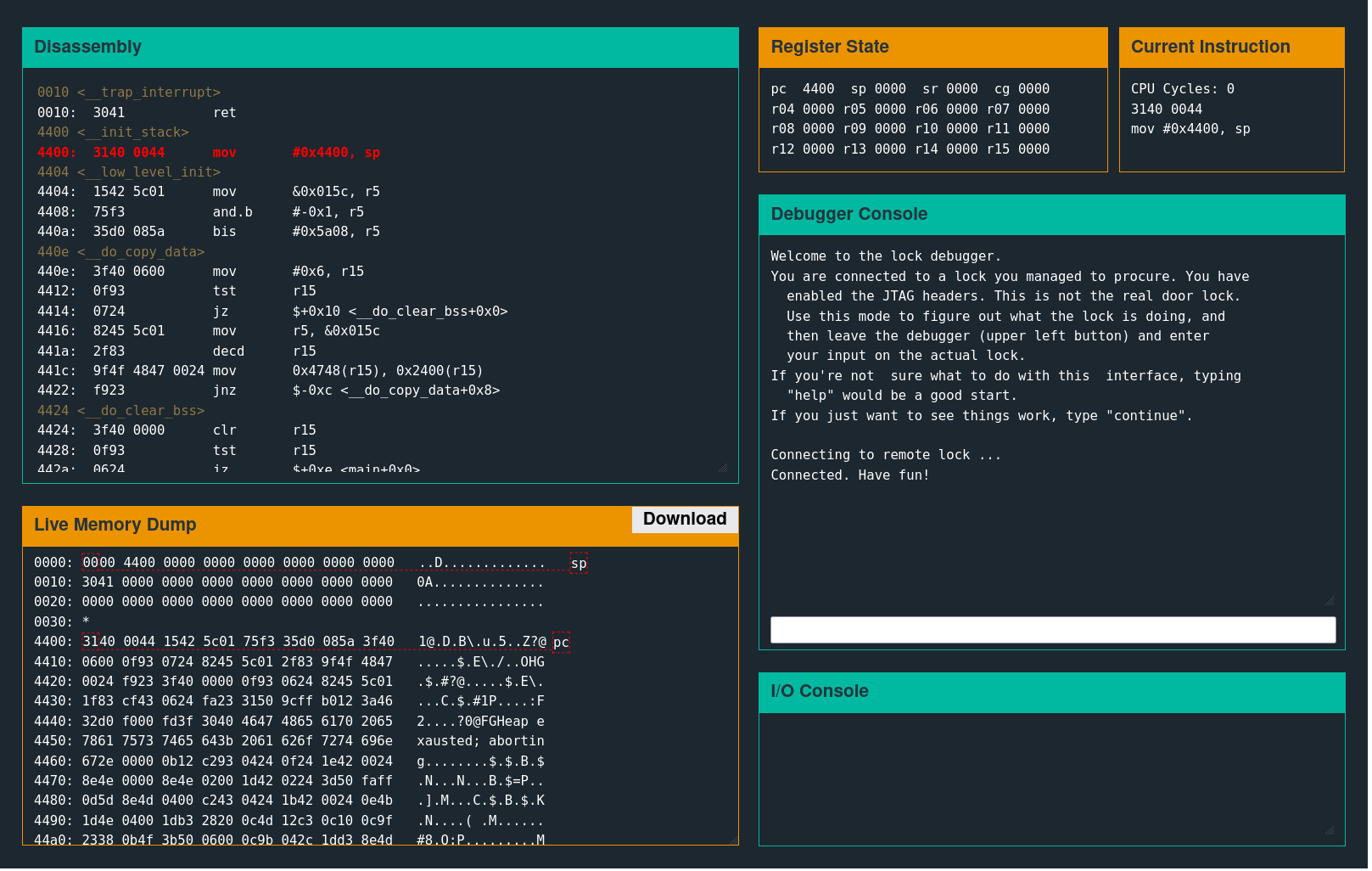

Several separate windows control the debugger functionality.

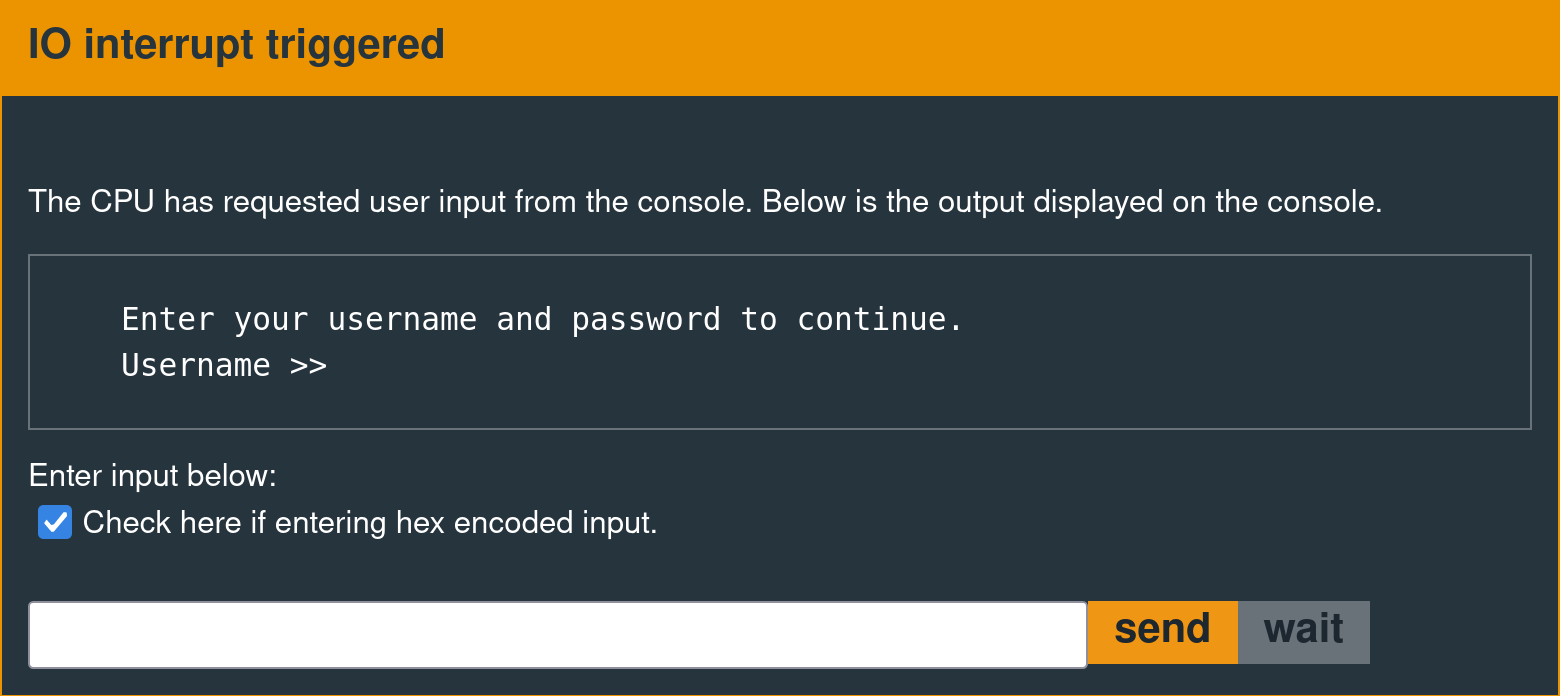

A user input prompt like the following is the device's external communication interface.

Exploit Development Objective

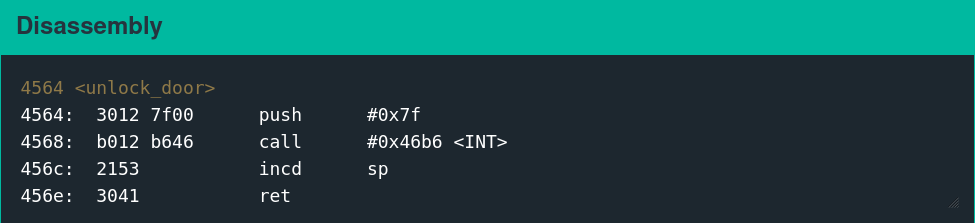

The equivalent of popping a shell on this system is calling interrupt 0x7F. On earlier challenges in the series, there is a dedicated function called unlock_door that does this.

Executing the following shellcode is functionally equivalent to calling the unlock_door function.

Disassembly

3240 00ff mov #0xff00, sr

b012 1000 call #0x10
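The byte pairs in the left-hand column are the little-endian encoding of each 16-bit instruction word. The following Python sketch packs the four words and prints the resulting hex string. The variable names are illustrative, and the values 0x4032 and 0x12b0 are taken to be the MSP430 opcode words for `mov #imm, sr` and `call #imm`.

```python
import struct

# 16-bit words of the unlock shellcode, in execution order.
WORDS = [
    0x4032,  # mov #imm, sr (opcode word)
    0xFF00,  # immediate operand loaded into sr
    0x12B0,  # call #imm (opcode word)
    0x0010,  # immediate operand: call target 0x10
]

# "<H" packs an unsigned 16-bit value with the low byte first.
shellcode = b"".join(struct.pack("<H", word) for word in WORDS)

print(shellcode.hex())  # -> 324000ffb0121000
```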

Assembly

324000ffb0121000

The following message is displayed in the interface when the interrupt is called successfully.

High-Level Analysis

This version implements ed25519-based signature verification for any code provided as a debug payload.

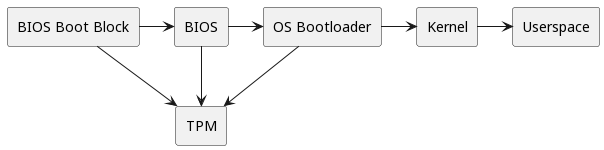

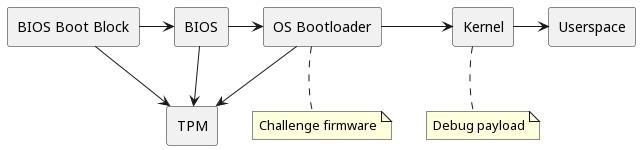

TPM Based Secure Boot Implementation on x86

The debug payload is conceptually similar to a signed bootloader payload, so having context about how secure boot works is helpful. Consider the x86 secure boot implementation for the sake of reference. Wikipedia provides a decent overview, but low-level implementation details are left vague. Specific implementations enforce secure boot through several mechanisms, but x86 platforms usually use a TPM. The following graph explains this process:

A typical hardware TPM is a physical chip on the motherboard that is hardened against relatively sophisticated hardware attacks and connected to the processor via a low-speed bus. The BIOS boot block is located in a ROM chip and is physically impossible to alter via a software attack. This chip contains the first code that runs after turning on the system. As a simplified explanation, the BIOS boot block code will take a cryptographic checksum of the BIOS code (the section executed next) and send the resulting hash to the TPM. If the hash for the BIOS code does not match the one saved in the TPM, it will respond with a message informing the BIOS boot block that it should halt the boot process. This challenge-response protocol prevents attackers from modifying the BIOS code to insert malicious functionality.

The BIOS code executes, provided its checksum is on the TPM's whitelist. It then takes a checksum of the OS bootloader, sends it to the TPM, and the cycle repeats.

Each code block is responsible for taking a checksum of the next one and sending it to the TPM for verification, forming a "chain of trust" that can prove no malicious alteration of the boot code occurred. This process only works if the "root of trust" (i.e., the BIOS boot block) is practically impossible to alter and if there are no firmware vulnerabilities in subsequent stages.
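The chain of trust described above can be sketched in a few lines of Python. This is a simplified model of the description in this section only: the choice of SHA-256, the `allowlist` set standing in for the TPM, and the stage names are all assumptions, and a real TPM exposes a different interface.

```python
import hashlib


def measure(blob: bytes) -> str:
    """Cryptographic checksum of one boot stage."""
    return hashlib.sha256(blob).hexdigest()


def boot(stages, allowlist):
    """Run stages in order; halt at the first one whose hash is unknown."""
    executed = []
    for name, blob in stages:
        if measure(blob) not in allowlist:
            break  # the verifier tells the previous stage to halt
        executed.append(name)
    return executed


# Hypothetical stage contents.
bios = b"bios code v1"
bootloader = b"os bootloader v1"
kernel = b"kernel v1"
allowlist = {measure(bios), measure(bootloader), measure(kernel)}

good = boot([("bios", bios), ("bootloader", bootloader), ("kernel", kernel)], allowlist)
evil = boot([("bios", bios), ("bootloader", b"tampered"), ("kernel", kernel)], allowlist)

print(good)  # -> ['bios', 'bootloader', 'kernel']
print(evil)  # -> ['bios']
```

A tampered stage stops the chain, and nothing after it runs. The model says nothing about bugs inside an accepted stage, which is the class of flaw these challenges target.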

A reasonable assumption is that the "debug payload" is somewhat analogous to a kernel, and the goal is to replace it with a malicious version that circumvents the platform integrity checks implemented in the functionality that is analogous to an OS bootloader (the challenge firmware). Alternately, it might be one layer back (i.e., the firmware is similar to a BIOS, and the debug payload is the OS bootloader). Either way, these challenges involve exploiting a vulnerability in the previous stage to break the chain of trust.

Sample Payload

The example payload format is as follows.

800000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

Sample Output

Running the continue command in the debugger with no breakpoints produces the following output at the I/O console.

Welcome to the secure program loader.

Please enter debug payload.

Payload Breakdown

The first several bytes of the debug payload are similar to Vancouver.

800000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

Load Address

The first two bytes are 0x8000 (big-endian). These bytes are identical to the load address from Vancouver, so it is reasonable to assume they serve the same purpose here.

800000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

Executable Segment

Similarly to Vancouver, there is also a distinguishable RET instruction (0x3041), which is likely part of the executable code segment.

800000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

Executable Segment Size

A low, single-byte value (0x6) precedes the RET instruction—most likely the executable segment size.

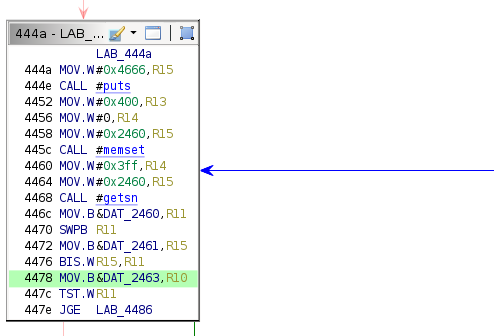

800000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

This hypothesis is supported by inspecting the following disassembly from the main function.

Aside from the change to the getsn destination address, this is almost identical to the equivalent section from Vancouver. The above code interprets the 0x6 value as a byte rather than a word—exactly how the previous firmware version parsed the size field.

Unknown Byte

There is a null byte of unknown import preceding the size field. It is not immediately apparent what this byte does.

800000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

Signature

Because the first obvious instruction is a RET, execution does not reach any subsequent code. The implication is that all of the following data is a digital signature.

800000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

Dynamic Analysis

Executing the above example payload will print the following message to the console.

Signature valid, executing payload

Breaking at address 0x8000 shortly after the getsn function call and continuing confirms that execution reaches the RET instruction at address 0x8000.

Much like in Vancouver, the sample debug payload will immediately return, and the main function will loop. The inferred payload structure is thus as follows:

| Load Address | Unknown | Size (Bytes) | Executable code | Signature |
|---|---|---|---|---|
| 8000 | 00 | 06 | 3041 (RET) | f23630084d78f18b0... |
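The inferred layout can be expressed as a small parser. This is a sketch under stated assumptions: the `DebugPayload` type and `parse_payload` function are hypothetical names, the load address is read as big-endian as described above, and the signature is assumed to be the final 64 bytes (the standard ed25519 signature length).

```python
from dataclasses import dataclass

HEADER_LEN = 4       # load address (2) + unknown (1) + size (1)
SIGNATURE_LEN = 64   # assumption: standard ed25519 signature length


@dataclass
class DebugPayload:
    load_address: int
    unknown: int
    size: int
    code: bytes
    signature: bytes


def parse_payload(hex_string: str) -> DebugPayload:
    raw = bytes.fromhex(hex_string)
    if len(raw) < HEADER_LEN + SIGNATURE_LEN:
        raise ValueError("payload too short")
    return DebugPayload(
        load_address=int.from_bytes(raw[0:2], "big"),
        unknown=raw[2],
        size=raw[3],
        code=raw[HEADER_LEN:-SIGNATURE_LEN],
        signature=raw[-SIGNATURE_LEN:],
    )


SAMPLE = (
    "800000063041"
    "f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a"
    "0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c"
    "6482fefc03cbbcff35ce9708"
)

payload = parse_payload(SAMPLE)
print(hex(payload.load_address), payload.size, payload.code.hex())
```

Note that `payload.size` (6) is larger than `len(payload.code)` (2), which is the discrepancy examined in the next section.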

Unintended Instructions

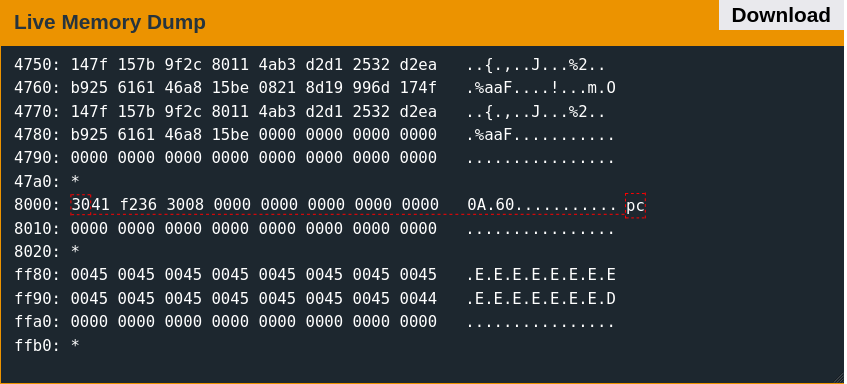

Interestingly, the executable segment size is too large. This results in four bytes of the signature being copied and appended to the end of the executable code. Ascertaining where the executable code ends and the signature begins is difficult because of this error. It becomes obvious why the demarcation is at this offset after understanding how large an ed25519 signature must be.

8000: 3041 f236 3008 0000 0000 0000 0000 0000 0A.60...........

8010: 0000 0000 0000 0000 0000 0000 0000 0000 ................

This bug could theoretically allow the interpretation of the signature bytes as code. These extra bytes disassemble into the following instructions.

| Opcode | Disassembly |
|---|---|
| f236 | jge $-0x21a |
| 3008 | (Invalid instruction) |

This strange behavior is not immediately useful. Using the JGE instruction as a ROP gadget is theoretically possible, but that would first require redirecting the execution flow. Normal execution never reaches that conditional jump because the program executes the RET instruction first.
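The over-copy described in this section can be modelled as follows. This is a sketch of the observed behavior, not the firmware's actual code: `load_code` is a hypothetical helper that copies `size` bytes starting right after the four-byte header.

```python
def load_code(raw: bytes) -> bytes:
    """Return the bytes that end up at the load address."""
    header_len = 4   # load address, unknown byte, size
    size = raw[3]    # single-byte size field
    return raw[header_len:header_len + size]


# Only the start of the sample payload is needed for this illustration.
sample_prefix = bytes.fromhex("800000063041f23630084d78f18b")

loaded = load_code(sample_prefix)
print(loaded.hex())      # -> 3041f2363008
print(loaded[2:].hex())  # -> f2363008 (first four signature bytes)
```

The six copied bytes match the memory dump at 0x8000: the two-byte RET followed by the first four bytes of the signature.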

Signature Verification Testing

The first step is to ensure that signature verification works properly and understand which parts of the payload are signed, accomplished by flipping bits in each of the four documented fields.

Load Address

900000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

Executable Segment Size

800000083041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

Executable Segment

800000064041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

Signature

800000063041ff3630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

Changing the first byte of the load address, size, executable code, or signature causes verification to fail with the following error.

Incorrect signature, continuing

Please enter debug payload.

Based on this, it is reasonable to assume the entire payload is signed and thus unalterable, suggesting that there is either a vulnerability in the ed25519 implementation, a logic flaw that allows for bypassing signature verification, or a memory safety issue that is exploitable using only the signed payload.
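The four tampered inputs used in this test can be regenerated with a short script. The `tamper` helper and the `MUTATIONS` table are illustrative names; the offsets and replacement values are the ones visible in the payloads above.

```python
SAMPLE = (
    "800000063041"
    "f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a"
    "0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c"
    "6482fefc03cbbcff35ce9708"
)


def tamper(hex_payload: str, offset: int, value: int) -> str:
    """Return a copy of the payload with one byte overwritten."""
    raw = bytearray.fromhex(hex_payload)
    raw[offset] = value
    return raw.hex()


# field name -> (byte offset, replacement value)
MUTATIONS = {
    "load address": (0, 0x90),
    "size": (3, 0x08),
    "executable code": (4, 0x40),
    "signature": (6, 0xFF),
}

tampered = {name: tamper(SAMPLE, off, val) for name, (off, val) in MUTATIONS.items()}

for name, payload in tampered.items():
    print(f"{name:16} {payload[:16]}...")
```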

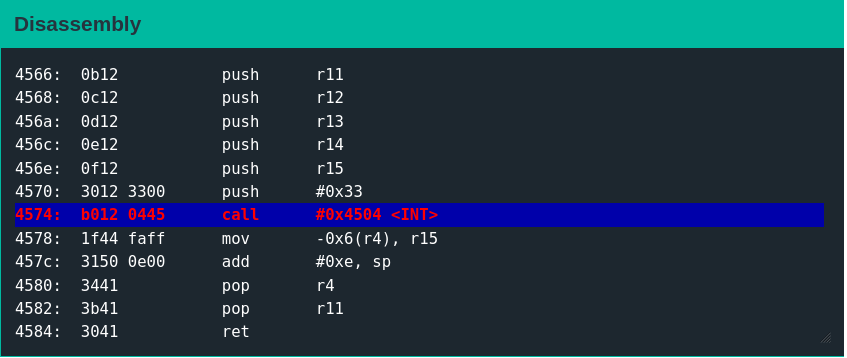

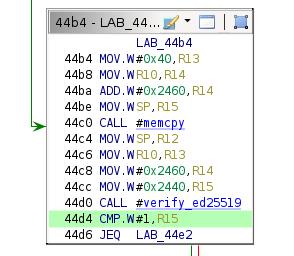

The disassembly for the verify_ed25519 function is as follows.

4552 <verify_ed25519>

4552: push r11

4554: push r4

4556: mov sp, r4

4558: add #0x4, r4

455a: decd sp

455c: clr -0x6(r4)

4560: mov #0xfffa, r11

4564: add r4, r11

4566: push r11

4568: push r12

456a: push r13

456c: push r14

456e: push r15

4570: push #0x33

4574: call #0x4504 <INT>

4578: mov -0x6(r4), r15

457c: add #0xe, sp

4580: pop r4

4582: pop r11

4584: ret

The code in this function suggests that ed25519 signature verification on this platform uses a custom, undocumented hardware interrupt: 0x33. Even if there were a vulnerability in the ed25519 implementation, there would be no way to analyze it directly.

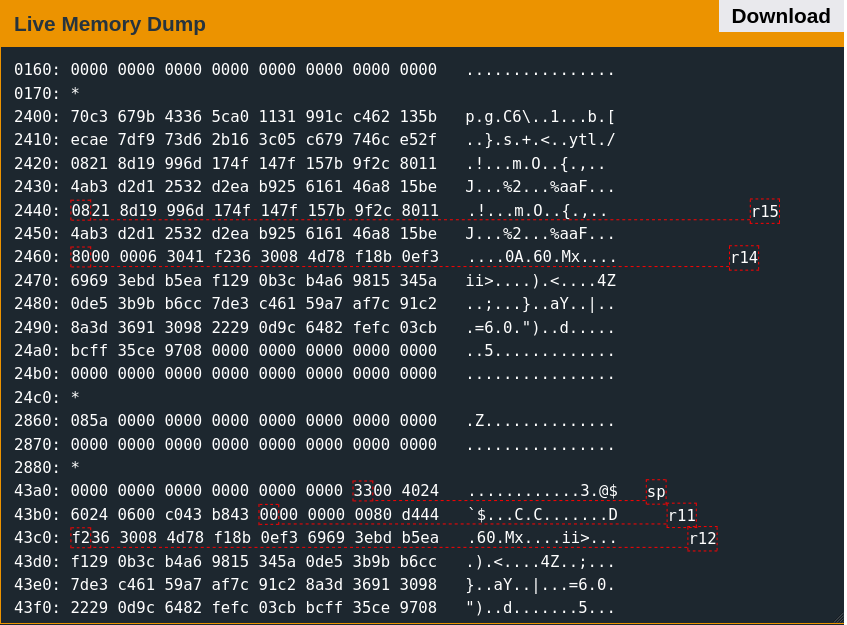

Static Analysis

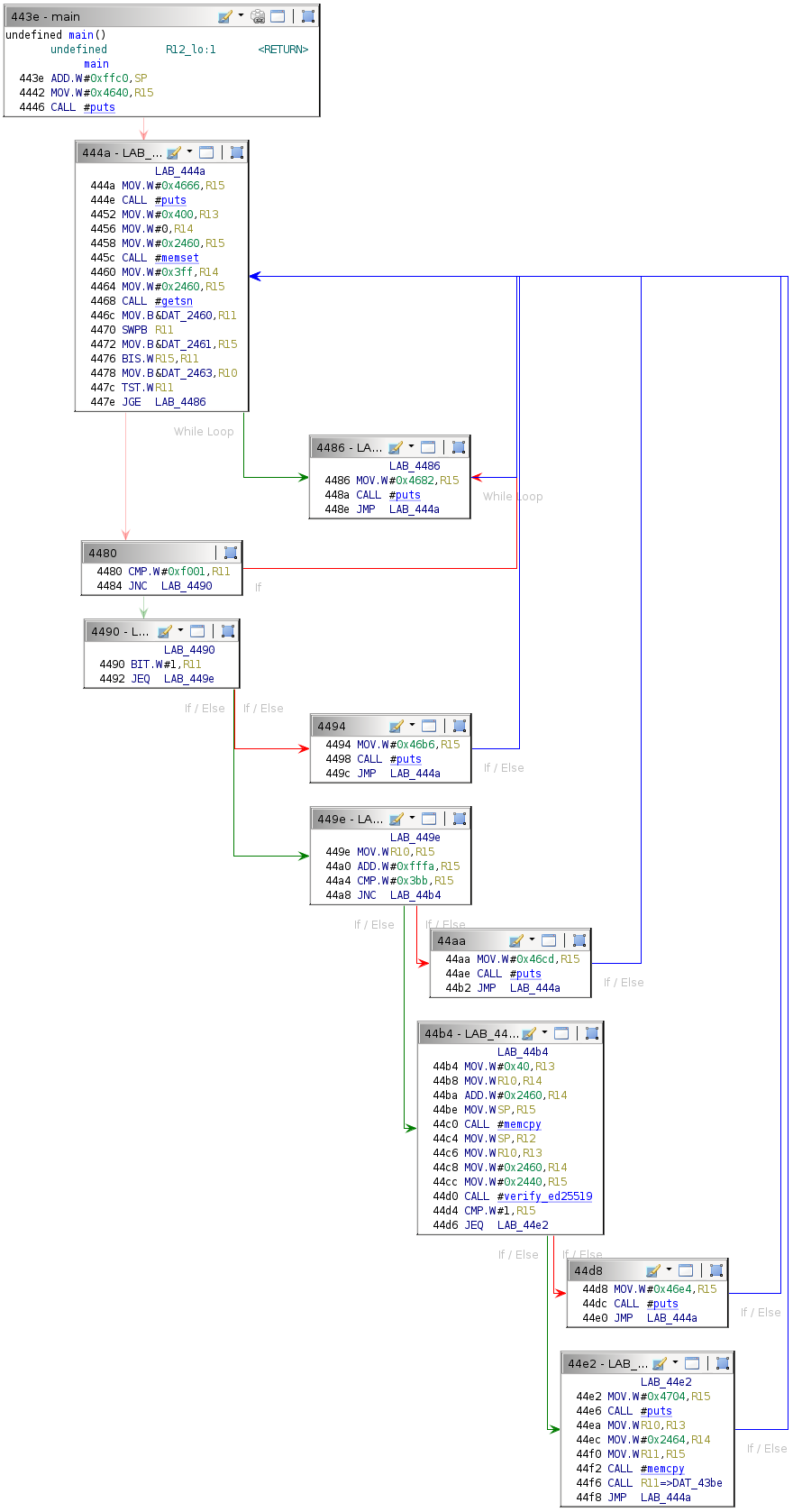

The static analysis process can begin after downloading a memory snapshot via the debugger interface, importing the binary into Ghidra, disassembling from the entrypoint at 0x4400, and renaming functions. The following is the Ghidra function graph (flow control) for the main function.

The program prints various status messages by calling puts. Examining the string that each conditional block prints allows for conjecture about its purpose.

| String Address | String | Address of Conditional Block Containing Referencing Code |

|---|---|---|

| 4640 | "Welcome to the secure program loader." | 443e |

| 4666 | "Please enter debug payload." | 444a |

| 4682 | "Load address outside allowed range of 0x8000-0xF000" | 4486 |

| 46b6 | "Load address unaligned" | 4494 |

| 46cd | "Invalid payload length" | 44aa |

| 46e4 | "Incorrect signature, continuing" | 44d8 |

| 4704 | "Signature valid, executing payload" | 44e2 |

Most of the status messages are early termination conditions for the main loop. It is worth experimentally verifying that all of these work as designed.

Input #1

700000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708Output

Load address outside allowed range of 0x8000-0xF000Input #2

f20000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708Output

Load address outside allowed range of 0x8000-0xF000Input #3

800100063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708Output

Load address unalignedInput #4

800000013041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708Output

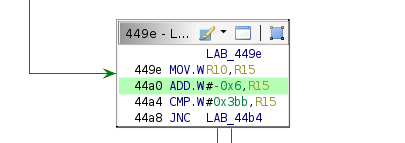

Invalid payload lengthAll of these checks seem to work correctly. It is worth noting that the incorrect size field is due to a conditional jump in block 0x449e, which prevents it from being set below six.

Convert the size integer (at address 0x44a0) to a signed 16-bit integer in the Ghidra display for easier readability.

Because the code is short (2 bytes), this causes copying of a chunk of the signature along with it. It is also worth noting that the third byte in the payload seems to be completely unused.

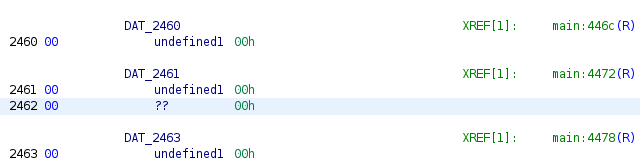

800000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

Observing the lack of XREFs to that specific memory location confirms this fact.
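The early-exit checks exercised in this section can be summarized as a small model. This is a sketch, not a decompilation: the function name `check_header` is hypothetical, the range bounds are assumed to be inclusive, the order of the checks is assumed to follow the order of the status strings, and only the lower bound on the size (six) is modelled.

```python
def check_header(raw: bytes) -> str:
    """Return the status message the loader is expected to print."""
    load_address = int.from_bytes(raw[0:2], "big")
    size = raw[3]  # raw[2] is the unused byte

    if not 0x8000 <= load_address <= 0xF000:  # assumption: inclusive bounds
        return "Load address outside allowed range of 0x8000-0xF000"
    if load_address % 2 != 0:
        return "Load address unaligned"
    if size < 6:  # assumption: upper bound not modelled
        return "Invalid payload length"
    return "header accepted"


# Headers of inputs #1 through #4, followed by the unmodified sample.
for header in ("70000006", "f2000006", "80010006", "80000001", "80000006"):
    print(header, "->", check_header(bytes.fromhex(header)))
```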

Attack Surface Analysis

There is no obvious way to bypass the signature verification check. There are only nulls in the 0x8000-0xF000 range, which seems to rule out memory corruption as a viable option and suggest the existence of a flaw in the logic surrounding the ed25519 implementation. In order to exploit such a flaw, it is necessary to understand the calling convention and parameters for a generic ed25519 signature verification function.

Because this algorithm is somewhat similar to DSA, a naive approach would be to assume the algorithm was misused (i.e., reused nonces were left littered in the firmware). However, this is impossible with ed25519 because it derives the nonce deterministically from a hash of the private key material and the message.

Calling Convention for ed25519

A good starting point is the Wikipedia article on EdDSA, which has a section devoted to ed25519. It includes basic information about cryptographic key sizes: public keys are 256 bits (32 bytes) long, and signatures are 512 bits (64 bytes) long. The data at the end of the debug payload (after the two-byte RET instruction) is exactly 64 bytes long, which suggests that it is a signature.

Another reference is the wiki page for the Crypto++ library, which includes an example of one potential calling convention. First, the code loads public keys from persistent storage.

FileSource fs2("public.key.bin", true);

ed25519::Verifier verifier;

verifier.AccessPublicKey().Load(fs2);

valid = verifier.GetPublicKey().Validate(prng, 3);

if (valid == false)

throw std::runtime_error("Invalid public key");

std::cout << "Keys are valid" << std::endl;

Message verification then takes pointers to the message and signature, along with sizes for both.

valid = verifier.VerifyMessage((const byte*)&message[0], message.size(),

(const byte*)&signature[0], signature.size());

if (valid == false)

throw std::runtime_error("Invalid signature over message");

std::cout << "Verified signature over message\n" << std::endl;

The above example implementation suggests that a generic function for verifying ed25519 signatures takes the following parameters.

- Public key (32 bytes)

- Message

- Message size

- Signature

- Signature size

The ed25519_verify function (labeled verify_ed25519 in the disassembly above) passes arguments in registers R11 through R15. The number of registers matches the parameter count used by the Crypto++ implementation.

pc 4574 sp 43ac sr 0001 cg 0000

r04 43be r05 5a08 r06 0000 r07 0000

r08 0000 r09 0000 r10 0006 r11 43b8

r12 43c0 r13 0006 r14 2460 r15 2440

These five registers reference two general blocks of memory: the stack and an area just after 0x2400 (probably the data section).

Based on the ed25519 Wikipedia description, it is reasonable to assume that R15 is a pointer to the public key. This data is exactly 32 bytes, as the 33rd would overlap the beginning of the debug payload (which is stored just after it in memory). R14 contains a pointer to the debug payload (the message). R13 is the message size. R12 points to the signature (copied to the stack previously).

┌───────────────────────────────────────────────┐

│ DATA SECTION │

├───────┬───────────────────────────────────────┤

│ADDRESS│ DATA │

├───────┼───────────────────────────────────────┤

│ 2400 │70c3 679b 4336 5ca0 1131 991c c462 135b│

├───────┼───────────────────────────────────────┤

│ 2410 │ecae 7df9 73d6 2b16 3c05 c679 746c e52f│

├───────┼───────────────────────────────────────┤

│ 2420 │0821 8d19 996d 174f 147f 157b 9f2c 8011│

├───────┼───────────────────────────────────────┤

│ 2430 │4ab3 d2d1 2532 d2ea b925 6161 46a8 15be│

├───────┼───────────────────────────────────────┤ ┌───┬──────────┐

│ 2440 │0821 8d19 996d 174f 147f 157b 9f2c 8011│◄─┤R15│PUBLIC KEY│

├───────┼───────────────────────────────────────┤ └───┴──────────┘

│ 2450 │4ab3 d2d1 2532 d2ea b925 6161 46a8 15be│

├───────┼───────────────────────────────────────┤ ┌───┬───────┐

│ 2460 │8000 0006 3041 f236 3008 4d78 f18b 0ef3│◄─┤R14│MESSAGE│

├───────┼───────────────────────────────────────┤ └───┴───────┘

│ 2470 │6969 3ebd b5ea f129 0b3c b4a6 9815 345a│

├───────┼───────────────────────────────────────┤ ┌───┬────────────┐

│ 2480 │0de5 3b9b b6cc 7de3 c461 59a7 af7c 91c2│ │R13│MESSAGE SIZE│

├───────┼───────────────────────────────────────┤ ├───┴────────────┤

│ 2490 │8a3d 3691 3098 2229 0d9c 6482 fefc 03cb│ │ 0006 │

├───────┼───────────────────────────────────────┤ └────────────────┘

│ 24a0 │bcff 35ce 9708 0000 0000 0000 0000 0000│

├───────┼───────────────────────────────────────┤

│ 24b0 │0000 0000 0000 0000 0000 0000 0000 0000│

└───────┴───────────────────────────────────────┘

┌───────────────────────────────────────────────┐

│ STACK │

├───────┬───────────────────────────────────────┤

│ADDRESS│ DATA │

├───────┼───────────────────────────────────────┤ ┌───┐

│ 43b0 │6024 0600 c043 b843 0000 0000 0080 d444│◄─┤R11│

├───────┼───────────────────────────────────────┤ ├───┼─────────┐

│ 43c0 │f236 3008 4d78 f18b 0ef3 6969 3ebd b5ea│◄─┤R12│SIGNATURE│

├───────┼───────────────────────────────────────┤ └───┴─────────┘

│ 43d0 │f129 0b3c b4a6 9815 345a 0de5 3b9b b6cc│

├───────┼───────────────────────────────────────┤

│ 43e0 │7de3 c461 59a7 af7c 91c2 8a3d 3691 3098│

├───────┼───────────────────────────────────────┤

│ 43f0 │2229 0d9c 6482 fefc 03cb bcff 35ce 9708│

└───────┴───────────────────────────────────────┘

R11 points to a stack location that contains a null word. The value of R11 itself (0x43b8) is too big to be a size. Given that the Wikipedia page implies that all signatures are 64 bytes, the signature size is likely hardcoded and thus unnecessary to pass as a parameter.

The word at the memory location pointed to by R11 is always zero before the 0x33 interrupt call, but it is set to 0x1 and loaded into R15 if signature verification is successful.

┌───────────────────────────────────────────────┐

│ STACK │

├───────┬───────────────────────────────────────┤

│ADDRESS│ DATA │

├───────┼───────────────────────────────────────┤ ┌───┐

│ 43b0 │6024 0600 c043 b843 0000 0000 0080 d444│◄─┤R11│

└───────┴────────────────────┬──────────────────┘ └───┘

│

▼ CALL

┌──────────────┐

│ed25519_verify│

└──────┬───────┘

│

▼ SUCCESS

┌───────┬───────────────────────────────────────┐ ┌───┐

│ 43b0 │6024 0600 c043 b843 0001 0000 0080 d444│◄─┤R11│

└───────┴───────────────────────────────────────┘ └───┘

Testing using a known invalid payload results in this word remaining zero.

┌───────────────────────────────────────────────┐

│ STACK │

├───────┬───────────────────────────────────────┤

│ADDRESS│ DATA │

├───────┼───────────────────────────────────────┤ ┌───┐

│ 43b0 │6024 0600 c043 b843 0000 0000 0080 d444│◄─┤R11│

└───────┴────────────────────┬──────────────────┘ └───┘

│

▼ CALL

┌──────────────┐

│ed25519_verify│

└──────┬───────┘

│

▼ FAILURE

┌───────┬───────────────────────────────────────┐ ┌───┐

│ 43b0 │6024 0600 c043 b843 0000 0000 0080 d444│◄─┤R11│

└───────┴───────────────────────────────────────┘ └───┘

ed25519_verify returns a boolean status code to indicate either success or failure in signature verification. This behavior is also identifiable by looking at the comparison at 0x44d4, where the firmware uses the contents of R15 to determine whether to print the "Incorrect signature, continuing" status message.

Implementation-Specific Calling Convention

The conjectured calling convention for this specific implementation of ed25519 is detailed below.

| Register | Description | Type |

|---|---|---|

| r11 | Status code | volatile int * |

| r12 | Signature | int * |

| r13 | Message size | int |

| r14 | Message | char * |

| r15 | Public key | int * |
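The same values can be read directly out of the stack row at 0x43b0 once the little-endian byte order is taken into account. The sketch below decodes that row; the labels are hypothetical and simply pair each word with the register that holds the same value in the register dump.

```python
import struct

# Row copied from the stack diagram: 16 bytes starting at 0x43b0.
ROW_ADDRESS = 0x43B0
row = bytes.fromhex("6024 0600 c043 b843 0000 0000 0080 d444")

# The MSP430 is little-endian, so each byte pair is a reversed 16-bit word.
words = struct.unpack("<8H", row)

labels = [
    "message (r14)",
    "message size (r13)",
    "signature (r12)",
    "status pointer (r11)",
]
for label, word in zip(labels, words):
    print(f"{label:22} 0x{word:04x}")

# r11 holds an address inside this same row; resolve it to read the status word.
status_offset = (words[3] - ROW_ADDRESS) // 2
print("status word:", words[status_offset])  # -> status word: 0
```

The first four words equal R14, R13, R12, and R11, which is consistent with the push sequence in the disassembly, and the status pointer resolves to the null word that becomes 0x1 on success.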

Identifying Key Material Leakage

Tampering with the status code returned by ed25519_verify does not seem possible. There is also nothing about the parameters which suggest the implementation is flawed. That said, understanding the calling convention is helpful because it grants insight into the contents of the memory regions described above. Nothing resides in the stack memory above the signature location nor the area below the debug payload. Interestingly, 64 bytes of data above the public key are seemingly unused by the program. Given the proximity of this blob to other data used by ed25519_verify, it is reasonable to suspect that some of it might be a private key.

┌───────────────────────────────────────────────┐

│ DATA SECTION │

├───────┬───────────────────────────────────────┤

│ADDRESS│ DATA │

├───────┼───────────────────────────────────────┤ ┌───────────┐

│ 2400 │70c3 679b 4336 5ca0 1131 991c c462 135b│◄─┤PRIVATE KEY│

├───────┼───────────────────────────────────────┤ └───────────┘

│ 2410 │ecae 7df9 73d6 2b16 3c05 c679 746c e52f│

├───────┼───────────────────────────────────────┤

│ 2420 │0821 8d19 996d 174f 147f 157b 9f2c 8011│

├───────┼───────────────────────────────────────┤

│ 2430 │4ab3 d2d1 2532 d2ea b925 6161 46a8 15be│

├───────┼───────────────────────────────────────┤

│ 2440 │0821 8d19 996d 174f 147f 157b 9f2c 8011│

├───────┼───────────────────────────────────────┤

│ 2450 │4ab3 d2d1 2532 d2ea b925 6161 46a8 15be│

├───────┼───────────────────────────────────────┤

│ 2460 │8000 0006 3041 f236 3008 4d78 f18b 0ef3│

├───────┼───────────────────────────────────────┤

│ 2470 │6969 3ebd b5ea f129 0b3c b4a6 9815 345a│

├───────┼───────────────────────────────────────┤

│ 2480 │0de5 3b9b b6cc 7de3 c461 59a7 af7c 91c2│

├───────┼───────────────────────────────────────┤

│ 2490 │8a3d 3691 3098 2229 0d9c 6482 fefc 03cb│

├───────┼───────────────────────────────────────┤

│ 24a0 │bcff 35ce 9708 0000 0000 0000 0000 0000│

├───────┼───────────────────────────────────────┤

│ 24b0 │0000 0000 0000 0000 0000 0000 0000 0000│

└───────┴───────────────────────────────────────┘
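One detail in the dump supports this suspicion. Several ed25519 libraries store a secret key as 64 bytes: a 32-byte seed followed by a copy of the 32-byte public key. The sketch below checks whether the unused blob has that shape; the `DUMP` dictionary and `read` helper are illustrative, and the bytes are copied from the diagram above.

```python
# Rows copied from the data section diagram (address -> 16 bytes).
DUMP = {
    0x2400: "70c3 679b 4336 5ca0 1131 991c c462 135b",
    0x2410: "ecae 7df9 73d6 2b16 3c05 c679 746c e52f",
    0x2420: "0821 8d19 996d 174f 147f 157b 9f2c 8011",
    0x2430: "4ab3 d2d1 2532 d2ea b925 6161 46a8 15be",
    0x2440: "0821 8d19 996d 174f 147f 157b 9f2c 8011",
    0x2450: "4ab3 d2d1 2532 d2ea b925 6161 46a8 15be",
}
BASE = 0x2400
memory = b"".join(bytes.fromhex(DUMP[addr]) for addr in sorted(DUMP))


def read(address: int, length: int) -> bytes:
    """Read bytes from the reconstructed data section."""
    start = address - BASE
    return memory[start:start + length]


public_key = read(0x2440, 32)  # the buffer R15 points to
blob = read(0x2400, 64)        # the seemingly unused data
candidate_seed, trailer = blob[:32], blob[32:]

print("trailer equals public key:", trailer == public_key)  # -> True
print("candidate seed:", candidate_seed.hex())
```

The second half of the blob is byte-for-byte identical to the public key, so under the seed-plus-public-key assumption the first 32 bytes at 0x2400 are the most likely private key candidate.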

Malicious Code Signing Attempt

Verifying this assumption is relatively straightforward.

- Insert an extra byte before the size field in the payload from Vancouver

- Sign the payload using the suspected private key

- Append the resulting signature to the end

- Submit the debug payload

If the signing process works (i.e., the data is a valid, uncorrupted ed25519 private key) and the data format is correct, this should yield arbitrary code execution. There are multiple utilities to handle the ed25519 signing. The simplest option is using a site like cyphr.me.

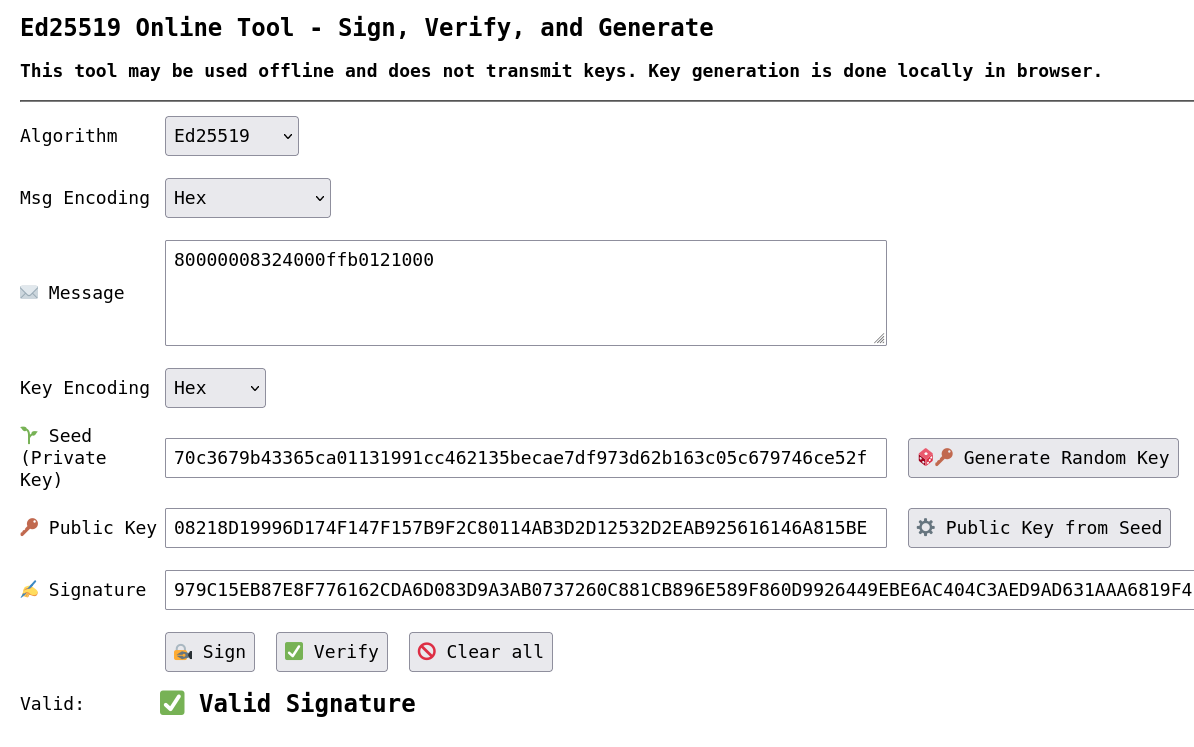

Generating a private key using the above site provides an example of the format it expects. Private keys are only 32 bytes, so if the theory is accurate, the key could start at any offset between address 0x2400 and 0x2420. The 32 bytes beginning at 0x2400 are assumed to be the private key for initial testing.

Payload

| Load Address | Unknown | Size (Bytes) | Executable code |
|---|---|---|---|
| 8000 | 00 | 08 | 3240 00ff b012 1000 |

Raw Payload

The raw payload bytes to be signed are as follows.

80000008324000ffb0121000

Signature

This data produces the following signature. Set the "msg encoding" parameter on the cyphr.me site to hex before signing.

979C15EB87E8F776162CDA6D083D9A3AB0737260C881CB896E589F860D9926449EBE6AC404C3AED9AD631AAA6819F411A29ADE1B74F7F67CE917588715268F01

Final Payload

The final payload is the concatenation of the raw payload and the signature.

80000008324000ffb0121000979C15EB87E8F776162CDA6D083D9A3AB0737260C881CB896E589F860D9926449EBE6AC404C3AED9AD631AAA6819F411A29ADE1B74F7F67CE917588715268F01

Signature Verification Failure

Unfortunately, this does not work, and signature verification fails at runtime.

Welcome to the secure program loader.

Please enter debug payload.

Incorrect signature, continuing

Please enter debug payload.

At first glance, the obvious conclusion is that the extracted data is not the private key. One approach would be to try every starting offset between 0x2400 and 0x2420 as the private key, but there is a better solution. Unlike DSA, the ed25519 algorithm is deterministic: given the same exact inputs, it should produce the same output. The simplest way to determine whether any given data is the correct private key is to attempt to sign the first six bytes of the example payload with it and see if the resulting signature matches.

The example payload (sans signature) is as follows.

800000063041

When signed with the 32 bytes of data starting at 0x2400, it produces the following signature:

F23630084D78F18B0EF369693EBDB5EAF1290B3CB4A69815345A0DE53B9BB6CC7DE3C46159A7AF7C91C28A3D3691309822290D9C6482FEFC03CBBCFF35CE9708

The above hex string matches the signature in the example payload, confirming that the first 32 bytes at address 0x2400 are the private key and suggesting an unrelated issue with the final exploit.
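The same determinism check can be run offline instead of through a website. The following is a minimal pure-Python sketch of ed25519 signing and verification that follows the algorithm in RFC 8032. It is not the implementation behind interrupt 0x33 or cyphr.me, it is not constant-time, and it is intended only for experiments like this one. The function names are illustrative.

```python
import hashlib

P = 2**255 - 19                                      # field prime
L = 2**252 + 27742317777372353535851937790883648493  # group order
D = -121665 * pow(121666, P - 2, P) % P              # curve constant d
SQRT_M1 = pow(2, (P - 1) // 4, P)                    # sqrt(-1) mod P

def _sha512_int(data):
    return int.from_bytes(hashlib.sha512(data).digest(), "little")

def _add(p, q):
    # Point addition in extended coordinates (X, Y, Z, T).
    a = (p[1] - p[0]) * (q[1] - q[0]) % P
    b = (p[1] + p[0]) * (q[1] + q[0]) % P
    c = 2 * p[3] * q[3] * D % P
    d = 2 * p[2] * q[2] % P
    e, f, g, h = b - a, d - c, d + c, b + a
    return (e * f % P, g * h % P, f * g % P, e * h % P)

def _mul(s, p):
    # Double-and-add scalar multiplication (not constant time).
    q = (0, 1, 1, 0)
    while s > 0:
        if s & 1:
            q = _add(q, p)
        p = _add(p, p)
        s >>= 1
    return q

def _recover_x(y, sign):
    if y >= P:
        return None
    x2 = (y * y - 1) * pow(D * y * y + 1, P - 2, P) % P
    if x2 == 0:
        return None if sign else 0
    x = pow(x2, (P + 3) // 8, P)
    if (x * x - x2) % P != 0:
        x = x * SQRT_M1 % P
    if (x * x - x2) % P != 0:
        return None
    return P - x if (x & 1) != sign else x

_GY = 4 * pow(5, P - 2, P) % P
_GX = _recover_x(_GY, 0)
BASE = (_GX, _GY, 1, _GX * _GY % P)

def _compress(p):
    zinv = pow(p[2], P - 2, P)
    x, y = p[0] * zinv % P, p[1] * zinv % P
    return (y | ((x & 1) << 255)).to_bytes(32, "little")

def _decompress(s):
    y = int.from_bytes(s, "little")
    sign, y = y >> 255, y & ((1 << 255) - 1)
    x = _recover_x(y, sign)
    return None if x is None else (x, y, 1, x * y % P)

def _expand(seed):
    h = hashlib.sha512(seed).digest()
    a = int.from_bytes(h[:32], "little")
    a = (a & ((1 << 254) - 8)) | (1 << 254)  # clamp the secret scalar
    return a, h[32:]

def public_key(seed):
    return _compress(_mul(_expand(seed)[0], BASE))

def sign(seed, msg):
    a, prefix = _expand(seed)
    pub = _compress(_mul(a, BASE))
    r = _sha512_int(prefix + msg) % L  # deterministic nonce
    R = _compress(_mul(r, BASE))
    h = _sha512_int(R + pub + msg) % L
    return R + ((r + h * a) % L).to_bytes(32, "little")

def verify(pub, msg, sig):
    if len(pub) != 32 or len(sig) != 64:
        return False
    A, R = _decompress(pub), _decompress(sig[:32])
    s = int.from_bytes(sig[32:], "little")
    if A is None or R is None or s >= L:
        return False
    h = _sha512_int(sig[:32] + pub + msg) % L
    lhs, rhs = _mul(s, BASE), _add(R, _mul(h, A))
    return ((lhs[0] * rhs[2] - rhs[0] * lhs[2]) % P == 0
            and (lhs[1] * rhs[2] - rhs[1] * lhs[2]) % P == 0)

# Values copied from the memory dump and the sample payload above.
seed = bytes.fromhex("70c3679b43365ca01131991cc462135b"
                     "ecae7df973d62b163c05c679746ce52f")
message = bytes.fromhex("800000063041")
sample_sig = bytes.fromhex(
    "f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc"
    "7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708")

print("derived public key:", public_key(seed).hex())
print("signature matches sample:", sign(seed, message) == sample_sig)
```

Because the nonce `r` is derived from the key and the message rather than from a random source, signing the same six bytes with the correct seed always reproduces the same 64-byte signature, which is what makes this comparison a usable key test.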

Debugging

The size field is the glaring difference between the example payload and the new payload containing the shellcode.

800000063041f23630084d78f18b0ef369693ebdb5eaf1290b3cb4a69815345a0de53b9bb6cc7de3c46159a7af7c91c28a3d3691309822290d9c6482fefc03cbbcff35ce9708

80000008324000ffb0121000979C15EB87E8F776162CDA6D083D9A3AB0737260C881CB896E589F860D9926449EBE6AC404C3AED9AD631AAA6819F411A29ADE1B74F7F67CE917588715268F01

The example payload size field is 6, which is 4 bytes larger than the length of the executable code section. Six bytes is also the total length of the payload excluding the signature (the load address, the unknown byte, the size field, and the executable code). One theory might be that the format changed from Vancouver to St. John's, and the size is now the total length rather than just the length of the executable code section.
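The theory is easy to check numerically. The sketch below builds the signed portion of a payload with the size field set to the header length plus the code length; `build_message` is a hypothetical helper, and the rule it encodes is the theory stated above, not a documented format.

```python
HEADER_LEN = 4  # load address (2) + unknown (1) + size (1)


def build_message(load_address: int, code: bytes, unknown: int = 0) -> bytes:
    """Build header + code with size = total length of the signed portion."""
    size = HEADER_LEN + len(code)
    if size > 0xFF:
        raise ValueError("size does not fit in one byte")
    return load_address.to_bytes(2, "big") + bytes([unknown, size]) + code


example = build_message(0x8000, bytes.fromhex("3041"))
exploit = build_message(0x8000, bytes.fromhex("324000ffb0121000"))

print(example.hex())  # -> 800000063041
print(exploit.hex())  # -> 8000000c324000ffb0121000
```

The rule reproduces the size of 6 in the example payload and gives 0x0c for the eight-byte shellcode, four more than the 0x08 used in the failed attempt.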

Arbitrary Code Execution

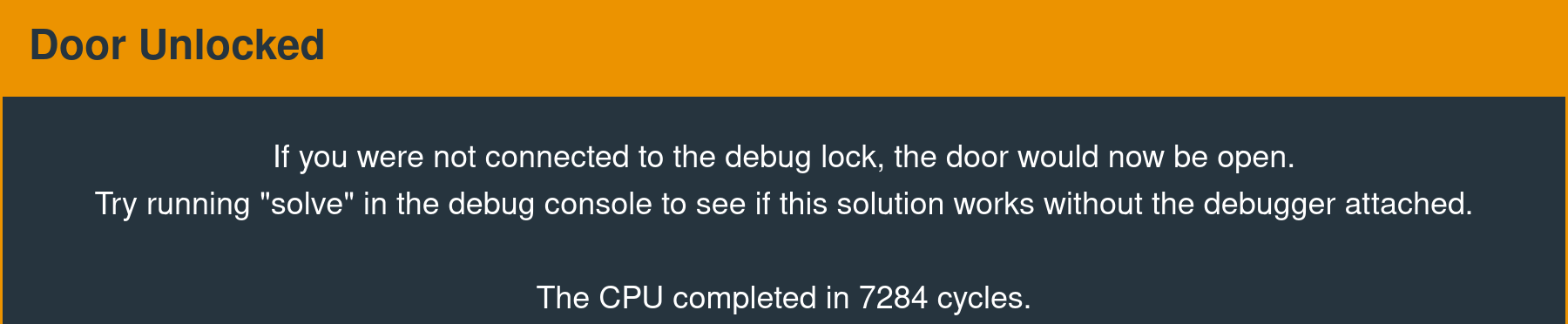

Adding four to the size field and re-signing produces the following payload.

8000000c324000ffb0121000483249ADDE744F491DAED26FFC723E08257D2B210A20177B1556C8F04D0D80B861453D737ED08AE10165CDF40B37DA23967A266F605ECFA9490B9C664682EC0B

Providing this payload at the input prompt produces the following output at the I/O console.

Signature valid, executing payload

The payload triggers the unlock interrupt, demonstrating successful arbitrary code execution. Oddly, this still results in four bytes of the signature tacked on to the end of the executable code at address 0x8000, which seems to be a bug in the implementation. Regardless, it does not affect the viability of the final attack.

| Load Address | Unknown | Size (Bytes) | Executable code |
|---|---|---|---|
| 8000 | 00 | 0c | 3240 00ff b012 1000 |

Door Unlocked

The CPU completed in 21663 cycles.

Remediation

Except for the developers leaking the debug payload signing key by embedding it in the firmware, the soundness of the implementation is not in question. The signature verification mechanism works perfectly well and improves on the Vancouver implementation.

This variety of key leakage is typically more of a bureaucratic than a technical failure.

- Developers should not have access to release signing keys if at all possible.

- There should be a dedicated process for signing builds

- It should be isolated from the rest of the network (ideally occurring on an air-gapped system)

- There should only be specific personnel with access to the signing system

- There should be an approval process to verify that release builds use release signing keys

A dedicated workstation with disk encryption or an HSM may work as a release signing system. While it may be tempting to blame developers for these problems, the real issue is the lack of security controls in the organization. Developers cannot leak signing keys that they do not have access to in the first place.